NEW YORK — Generative Artificial Intelligence, a type of AI that can create new content like text, images, audio and videos, is now being used by human traffickers to entice and exploit children, an official with the National Center for Missing & Exploited Children has warned.

Speaking at the national launch of global anti-human trafficking organization A21's “Can You See Me?” awareness campaign on Monday, Staca Shehan, vice president at the National Center for Missing & Exploited Children, said that last year alone the organization received nearly 5,000 reports of generative AI being used, or attempted to be used, to exploit a child.

Use of the technology to exploit children is one of the reasons the national nonprofit, which aims to help find missing children, reduce child sexual exploitation and prevent child victimization, released its Take It Down tool last year. The tool helps combat child sexual exploitation by enabling youth to remove sexually explicit images of themselves from the internet, according to the Office of Juvenile Justice and Delinquency Prevention.

“As technology changes and evolves, we want to use it for good, to fill gaps, and that's what we're trying to do with the Take It Down campaign — is to help give back some control to kids in getting the content removed from the internet. But at the same time, we're trying to leverage new and evolving technology,” Shehan said at a press conference held at the Omni Berkshire Place Hotel in Midtown.

“Those that are looking to target children are doing the same, and we're seeing that, especially with generative AI, where last year, we received almost 5,000 reports of instances where generative AI was used or attempted to be used to exploit a child.”

Shehan then shared chilling examples of how predators have been leveraging the technology to exploit children.

“So with the chat models that are available, we're seeing instances where an offender will enter text and then ask for help, restating that to appear as if they are a child, so that they can engage in communication online with other children and appear to be of similar age with the intent of exploiting them,” Shehan said.

“We're also seeing offenders enter questions asking for guides or tutorials on how to groom or recruit children and do it more efficiently. We're seeing instances where imagery of child sexual abuse material is added to generative AI and asked to create more similar images or alter them in some way, or even a picture that's innocuous that does not include any nudity of a child and remove the clothing of that child and create child sexual abuse material,” she explained.

“We're seeing offenders use these tools in ways to target children, and when these tools are being created without safety by design principles, we're seeing how they're becoming more risky in terms of their ability to exploit children.”

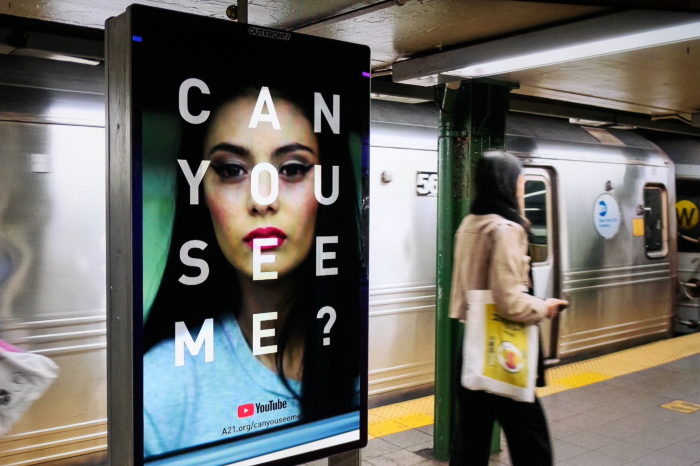

A21's “Can You See Me?” awareness campaign, run in collaboration with the Federal Bureau of Investigation, Homeland Security Investigations New York, the National Center for Missing & Exploited Children, advertising firms LAMAR and Outfront Media, Amtrak and Omni Hotels, seeks to help the general public recognize indicators of human trafficking and report suspected cases ahead of the peak holiday travel season. The campaign will feature vivid advertisements on Times Square billboards, in transportation hubs and on digital platforms, urging the public to take action by calling national hotlines.